AMPLIFY VOL. 37, NO. 5

Conversations about AI are unavoidable because the potential benefits of AI adoption are unprecedented, particularly for organizations. Generative AI (GenAI) allows firms to increase employee productivity, streamline internal processes, and enhance customer-facing ones. It has the potential to create new avenues for value generation by fundamentally transforming the way firms generate data and interact with it.

GenAI provides a conversational interface to query data sets and generates easily interpretable outputs, letting those without technical expertise interact with data. This creates new possibilities for leveraging data and improving decision-making. These benefits make GenAI a true game changer, helping companies derive business value from existing and emerging data.

However, these boons are not without banes. Debates over GenAI include issues common to most technology development debates, such as job displacement and ethics. But this debate also deals with issues distinctive to AI, such as intellectual property, algorithmic bias, and information reliability.

With analytics now an indispensable component of value generation, the issue of information reliability has become critical. So it’s no surprise that AI hallucination (when GenAI produces nonsensical or inaccurate output) has recently taken center stage. The public learned that ChatGPT erroneously claimed a law professor had sexually harassed a student in a class,1 Google’s Gemini generated images deemed to be “woke” that were historically inaccurate,2 and Meta’s Galactica was shut down because of inaccuracies and bias.3

Relying on fabricated data from these hallucinations can have severe consequences. Blind reliance on any information source is never advisable, and ensuring data accuracy is fundamental in any analytical process. But verifying accuracy is particularly challenging when GenAI presents fabricated information as factual, often with limited transparency about the source. AI hallucination is especially problematic for novice users who lack the technical expertise to verify such data.

Some argue that it’s time to focus on GenAI’s value potential, but concerns about its use continue and should not be overlooked, especially when developing adoption strategies. So we must ask: “To what extent is AI hallucination acceptable?” and “How much room for error are we willing to tolerate?” These are not easy questions, but they are essential to navigating the path forward with GenAI.

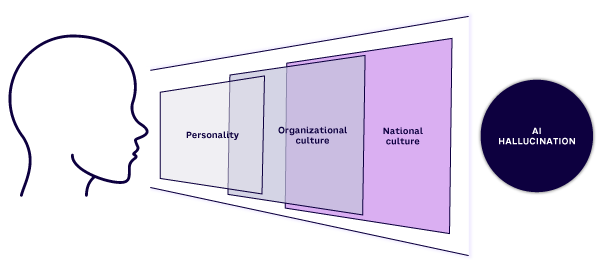

The degree to which such hallucinations are tolerated is contingent on context and the circumstances surrounding the usage. But how does context come into play? And what does it mean at various levels of analysis? Figure 1 illustrates the contextual lenses (national culture, organizational culture, and personality) through which we can evaluate tolerance to AI hallucination at different levels of analysis (i.e., societal, organizational, and individual).

AI Hallucination Through the Lens of National Culture

At a societal level, context comes from national culture. A global consensus on tolerance for hallucinations is unlikely, which makes understanding national cultures instrumental in assessing societies’ tolerance levels.

One cultural aspect particularly useful in understanding the differences in tolerance to AI hallucination is how comfortable individuals are with uncertainty and ambiguity. Individuals in societies that are comfortable with uncertainty in their daily lives may be more tolerant of hallucinations; those who are highly risk-averse may be less tolerant.

Another aspect that affects tolerance to hallucinations is how a society values short-term and long-term outlooks. Societies with long-term orientations are more likely to tolerate AI hallucinations by acknowledging the potential future value of embracing GenAI. Conversely, societies with short-term orientations may be hesitant to adopt GenAI tools, given the immediate negative consequences we’ve seen from hallucinated outcomes.

The EU Artificial Intelligence Act aims to regulate AI contingent on the risk these systems represent. Importantly, it reflects the notion of disparate perspectives on AI use among societies by regulating its use for specific applications deemed unacceptable in the EU but acceptable in countries with diverging cultural stances (e.g., social-scoring systems like those used in non-Western societies).4

The AI Act reinforces the idea that the circumstances surrounding the use of AI interact with national culture to determine a society’s tolerance of error. It categorizes AI systems according to their risk in order to regulate their use; for example, it prohibits systems that deceptively manipulate behavior in ways that lead to significant harm. This suggests that although fabrications may be viewed differently due to contextual and cultural cues, the magnitude of the consequences of relying on AI-generated information drives what we consider tolerable.

AI Hallucination Through the Lens of Organizational Culture

At the firm level, we can better understand tolerance to AI hallucination by looking at organizational culture. All firms are unique, but similarities or differences among them help define various cultures.5 Some organizations are more open to embracing change and adopting new technologies; this may come at the price of uncertainty and newfound error sources. Accepting that markets are dynamic and constantly changing stems from a culture of flexibility and adaptability, so these organizations may be more likely to adopt GenAI and be more tolerant of hallucinations.

Other organizations are not as open to change. This may come from certain aspects of a firm’s culture, such as a focus on processes. That focus helps with productivity, but it may come at the expense of adaptability. Organizations with this type of culture may not be as tolerant of hallucinations, because adherence to processes could hinder flexibility in navigating GenAI’s limitations.

As our reliance on data for decision support increases, so will the situations in which GenAI can be useful. However, not all situations have the same stakes in terms of errors, and companies with diverging cultural stances are likely to react very differently.

Google’s Gemini “woke” responses clearly illustrate the importance of the stakes surrounding GenAI use.6 The episode could have been viewed as an error in processing and output, but many found the historical inaccuracies and biases intolerable, and Google’s reputation as a tech leader took a serious hit.

AI Hallucination Through the Lens of Personality

Societal and organizational views can give us important insights into AI hallucination tolerance, but to understand this phenomenon fully, we must look at the end user.

Fortunately, personality can help us assess individual beliefs about the acceptability of AI hallucinations. Individuals high in neuroticism may be less tolerant of AI hallucinations, for example, given their susceptibility to anxiety. Individuals who tend to be open to new experiences may be willing to tolerate certain levels of hallucination.

Alongside personality, we must consider the circumstances in which people engage with AI systems. Consider the example of the law professor wrongly accused by AI of sexual harassment. This could be viewed as an error in the system, but the stakes lower our tolerance. That is, an output error is unacceptable when someone’s career and reputation are on the line.

As organizations operate within societal dynamics and individuals engage within organizations, these contextual factors interact at a higher level of abstraction. They should not be considered in isolation; rather, we must look at them holistically to understand how we react to hallucinations and how we should interact with GenAI systems.

Should You Care About GenAI Hallucinating?

Amid the ongoing debate about the potential benefits of GenAI versus possible areas for concern, there are decisions to be made. GenAI is here to stay, and lingering on the debate stage without acting will set us back as the market moves forward.

As discussed in this article, competing factors come into play to determine our tolerance to AI hallucination, so a “Here’s what you should do” statement addressing this phenomenon is not the answer. We believe that understanding the context is crucial to making informed decisions about strategically adopting and implementing emerging technologies. This understanding can help us balance the potential value of the opportunity with our risk tolerance.

Here are several key takeaways:

- Consider the national context around your firm’s operations. What regulations are in place to guide GenAI’s adoption and use? Do they align with your organization’s culture, or do they require a cultural shift? Are there practices in other countries that closely resemble your own that you could adopt?

- Consider where your firm falls within each cultural dimension to determine how accepting your company is of new systems and its tolerance to AI errors.

- Get to know the individuals who make up your organization; many have probably informally adopted these technologies. Consider their experiences and personalities when devising adoption strategies that align with their current practices.

- Establish clear regulations that encourage transparency when AI-generated data is being leveraged for decision-making, but ensure their enforcement aligns with your organization’s culture and your employees’ personalities.

- Foster education on GenAI use and its potential pitfalls to promote a culture of responsible use.

We must not dismiss the issues surrounding AI hallucinations. The potential benefits of GenAI are virtually endless, but the repercussions from misuse or blind reliance on its output can be highly detrimental. The good news is that a balance is possible. Three things are key to that balance: (1) keeping context at the fore of your adoption strategies, (2) accounting for tolerance levels, and (3) mitigating the risk of misuse while capitalizing on the many opportunities this technology offers.

References

1 Verma, Pranshu, and Will Oremus. “ChatGPT Invented a Sexual Harassment Scandal and Named a Real Law Prof as the Accused.” The Washington Post, 5 April 2023.

2 Zitser, Joshua. “Google Suspends Gemini from Making AI Images of People After a Backlash Complaining It Was ‘Woke.’” Business Insider, 22 February 2024.

3 Heaven, Will Douglas. “Why Meta’s Latest Large Language Model Survived Only Three Days Online.” MIT Technology Review, 18 November 2022.

4 “EU AI Act: First Regulation on Artificial Intelligence.” European Parliament, 8 June 2023.

5 Gupta, Manjul, Amulya Gupta, and Karlene Cousins. “Toward the Understanding of the Constituents of Organizational Culture: The Embedded Topic Modeling Analysis of Publicly Available Employee-Generated Reviews of Two Major US-Based Retailers.” Production and Operations Management, Vol. 31, No. 10, August 2022.

6 Zitser (see 2).