AMPLIFY VOL. 37, NO. 12

Between now and 2030, we will undoubtedly see a transformation in AI adoption and application — but it is not clear which way this transformation will go. The huge advances made in the field in recent years are well-known, but they are not necessarily well-understood.

Anxiety is building, not just over AI’s legal, ethical, environmental, and social implications, but over whether it will yield the productivity gains so eagerly anticipated. Despite the rise to public prominence of generative AI (GenAI), especially since the 2022 release of ChatGPT, no significant rise in worker productivity has been observed.1 In fact, a recent Upwork study found that 77% of employees believe AI has made them less productive.2

The Gartner Hype Cycle is well established: initial excitement at a new technology’s potential leads to overinvestment, followed by a corrective crash that triggers consolidation and, in due course, more sustainable growth. AI may be entering the middle phase of this cycle. However, there are many examples of technologies that defied the cycle, either by sinking without a trace or continuing an unabated rise to success. The future of AI could go many ways.

Our struggles to convert AI into real-world gains suggest that the decisive challenge for the immediate future of AI is its successful integration into the tasks that skilled and professional people perform. According to the Upwork study, many executives feel their workers lack the skills to take advantage of the technology. Meanwhile, employees argue that time taken up checking unreliable AI output is a productivity killer.

Massachusetts Institute of Technology (MIT) economist David Autor graphically highlighted the vastly different value technologies can have in trained and untrained hands: “A pneumatic nail gun is an indispensable time-saver for a roofer and a looming impalement hazard for a home hobbyist.”3 AI cannot currently be expected to bridge all gaps in skill, experience, or data management within an organization.

Multiple Amplify contributors have called for an inclusive, humanistic approach to AI adoption.4,5 We believe that leaders looking to successfully integrate AI would benefit from undertaking a deep, widespread analysis of precisely what contribution AI can make to the most pivotal roles and skills in their organization. This is not only important for maximizing the positive value of AI; we argue that, in the face of wholesale automation of roles, a conscious effort is needed to maintain the skills and professional development ecosystem necessary to produce humans capable of adopting and exploiting AI in the future.

The Decomposition of Professions

Society’s treatment of skill and expertise has been evolving in parallel to AI developments. In their seminal 2015 work, The Future of the Professions, Richard and Daniel Susskind proclaimed the wholesale decomposition of the professions as both socially desirable and already underway.

They argue that the expert craft of a traditional professional can increasingly be abstracted and encapsulated as logic and processes. These can be implemented, perhaps under expert direction, by paraprofessionals using standard operating procedures (SOPs) or a range of digital applications, with the result being faster and more affordable service delivery. Ultimately, they ask, why place expertise in people rather than in things or rules?6

The Susskinds call for work to be analyzed as tasks rather than jobs to better pinpoint where human expertise is truly required. They call for professional training to be structured less around the technical details of tasks and more on the fundamental problems and values of the relevant sector. Similar to a craft apprenticeship, this should also foster familiarity with the complex interactions of experienced human operators. It certainly should not consist of years of repetitive experiences working at a given profession’s coalface.

Increasingly, in place of direct delivery, work will be about tool adoption, process analysis, results explanation, and dynamic integration with other areas of expertise. Education and training should adapt accordingly.

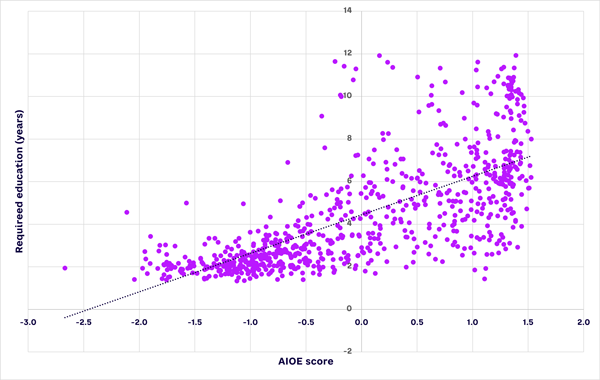

AI’s acceleration renders the issues the Susskinds raise even more urgent. Breakthroughs in GenAI have put professional roles requiring a high level of education at the forefront of automation for the first time. Figure 1 offers a snapshot of occupations’ exposure to AI (AIOE score) mapped to required education.7

In addition to offering a useful holistic model for considering the future of expertise, the Susskinds offer a lesson on the difficulties of looking ahead amid innovation and change. They were writing before the invention of the transformer architecture, which was pivotal to recent AI advances. As a result, they offered a prescient examination of issues and trends without foreseeing that one of the factors they analyzed would become a major driver in the medium term.

This illustrates the importance of taking a broad, circumspective view when considering technological innovation, which can often only be understood a posteriori, as we know from scientist/futurist Roy Amara:

Amara’s Law: We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.

The Marriage of Techne & Episteme

When we consider possible future models of AI-human interaction, a complex picture emerges. It has become commonplace to suggest AI will mainly automate work that is possibly meticulous but ultimately routine and well-understood. Examples include writing code for a programmer, drafting a document for a lawyer, and suggesting plausible candidate molecules to a pharmacologist.8-10

There may be applications in which AI plays the role of co-expert. For example, the pharmacologist just mentioned may be required by their organization to be familiar with a formidable library of documentation and SOPs. These are important, but they are ancillary to the core science being delivered. With its powers of summarization and information retrieval, a large language model (LLM) could function as a responsive guide to this entire domain of knowledge, capable of applying it to specific situations as they arise. For example, at least one trial has shown that AI-driven knowledge bots trained on product manuals and troubleshooting history can successfully support software engineers.11

Some roles might radically shift in emphasis, but this will not necessarily result in less skill being required from a human overall. A report from design toolmaker Figma suggests that in response to the plethora of options AI will generate, the role of a UI/UX designer will pivot to selection, justification, and persuasion over actual design.12

Design will also become far more data-driven, as user interactions with candidate designs can be simulated by AI at a scale rarely possible via human user acceptance testing.13 Google was a noted early adopter of design by big data; in 2009, it was famously excoriated for taking this to excess by departing design lead Doug Bowman. This is a likely direction for many roles oriented around design in a broader sense, and, when kept in proportion, it could be enriching for the activities involved.

Indeed, AI solutions may end up taking on so many tasks relevant to a role that the human’s main task becomes selecting the right model for each task and coordinating between them. In the clinical trial situation mentioned above, the SOPs might be accessible via an LLM while a model like AlphaFold identifies the molecules for the drug being trialed and another model scans the horizon for threats to the trial.14

Finally, AI itself will generate requirements for skills. These will not just include skills required to directly interact with AI, such as prompt engineering; they will also include extensions to existing services. For example, although cybersecurity has a lot of potential for AI-driven automation, AI applications themselves pose distinctive cybersecurity challenges with which cybersecurity professionals will have to come to grips.

This is a relatively optimistic vision of the future, in which skilled workers, augmented by AI, can focus on the fundamentals of their profession and adjacent synergies. In his 2004 book, The Gifts of Athena, economic historian Joel Mokyr argued that a key factor in the Industrial Revolution was the institutionalized and highly productive interplay of episteme and techne, which can be broadly defined as scientific knowledge of immutable truths and practical experience of messy realities. When they inform each other, the result is growth and progress.15

Respective AI and human capabilities cannot be easily mapped onto episteme and techne. AI is trained on massive banks of data (which can be thought of as experience); it then generalizes and pattern matches. The human’s role is not purely intellectual: they might have a deep understanding of the context that the AI does not, but they must take practical steps to use AI’s outputs in the real world.

Nonetheless, one can see the same interplay at work in emerging human-AI interactions, which can all be thought of as translating abstract knowledge into real outputs and vice versa.

The End of Skill Scarcities?

A key insight from Mokyr is that such an interplay is not organic. In the past, it required conscious organization. More ominously, Yuval Harari, in Nexus, his recent work on AI in the context of the history of information networks, counsels against comparisons of the nascent age of AI with the Industrial Revolution.16 The impact of AI could be vastly more profound, precisely because AI poses an unprecedented challenge to the human monopoly on thought (albeit without actually thinking).

Furthermore, the grim human ramifications that can be traced back to the Industrial Revolution (colonialism, economic inequality, and environmental destruction) do not make the comparison a sound basis for complacency, even if valid. History should motivate and inform our actions, not provide glib reassurance as to what comes next.

Few deny that AI adoption will lead to major readjustments in the labor market. Some roles may be entirely automated. Even those that are augmented may require far less skill. On the one hand, they will become radically democratized; on the other, remuneration and job security will diminish.

In terms of which roles will be most impacted, studies tend to identify white-collar occupations requiring a high level of education with an emphasis on information processing (refer back to Figure 1). According to the World Economic Forum, the roles most likely to be automated entirely are those that consist of “routine and repetitive procedures and do not require a high level of interpersonal communication.”17 We believe nonroutine roles that focus heavily on a particular craft, resist decomposition, and lack active contextual involvement are also at high risk. For example, drafting documents is only one aspect of a lawyer’s work, but translation is the defining activity of a translator.

This poses a challenge. Human experts will still be needed, but the incentives for individuals to amass that expertise will be reduced. Translators are already experiencing downward pressure on earnings due to GenAI.18 There is also concern for those starting out in the profession, as automation reduces the opportunities to gain direct experience.19 At the same time, experienced translators are in demand to check AI translations.

In an echo of the disconnect over AI’s utility identified in the Upwork study cited above, translators say this kind of task is more painstaking and time-consuming than fresh translation, but purchasers perceive it not to be worth as much. The techne has been automated, the human episteme built up through it is still needed, and the relationship between the two is poorly understood by decision makers.

Similar issues played out in Hollywood during the 2023 Writers Guild of America strike. One of the main issues was the alleged plan by studios to generate initial screenplays using AI and bring in writers as editors.

With the techne automated, will we eventually reach a point where there are not sufficient humans with the necessary experience to provide the required episteme and oversight? The Susskinds describe this risk to the skills ecosystem as a “serious but not fatal” challenge to the decomposition of the professions that they urge.20 They argue that prolonged exposure to automatable tasks early in one’s career does not constitute high-quality training anyway and propose the alternative model summarized above.

Their model may not be commercially realistic, however. In many career paths, early career professionals undertake easily automatable tasks in parallel to gaining experience they will later use to undertake more complex tasks. In a typical professional services firm, a junior professional performs tasks like drafting, simple coding, or data analysis while gaining experience in project management, software architecture, or client relationship management, which will be their focus later in their career. If AI is handling the menial tasks, it will become more difficult to justify to clients the expense of including juniors on projects if the juniors are blatantly there just to build up the experience required for more senior, nuanced roles at some future point.

We are at a happy historical juncture, in which both powerful AI and independently experienced professionals are available, but if AI is not deployed thoughtfully and responsibly, this will not always be the case. Leaders should consider the longer-term sustainability of their skills ecosystem, including which skills are going to be needed.

It is difficult to tell what skills will cease being needed. Currently, the best results from many tasks are achieved through a collaboration between AI and humans. As noted, publishers and Hollywood studios appear far from ready to dispense with human input. Computer-assisted translation tools, which can have an AI component, are well-established in professional translation. AI has proved superior to medical professionals in some diagnosis tasks when compared head-to-head, but the best results are reported from the blending of human and AI observations.21

In software development, AI-powered copilots increase productivity but still introduce bugs, struggle to fully optimize code, and do not perform as well on more advanced problems.22 One also needs to know exactly what to ask for from the AI and how to assemble and deploy the outputs. If we draw an analogy between the AI-augmented developer of the future and the software architect of the present, the consensus in the tech world is that the latter should still know how to code, to better inform their decision-making, even if they delegate the bulk of the actual coding.

One of the Susskinds’ flagship examples of the automation of professional work is the rise of online dispute resolution (ODR) systems, which were pioneered by eBay in the late 1990s and are now being augmented with AI. ODR offers parties in high-volume, low-value transactions quick and cheap access to justice without direct lawyer involvement. Yet lawyers and professional mediators have typically been involved in the design and evolution of ODR systems. The technology meets a need that quite possibly would never have been met other than by automation, but it does so thanks to a pipeline of human experts that continues to flow.

One could argue that augmentation is an intermediate stage and that AI will become fully autonomous in the future. From a technical perspective, this is highly contentious. Even the latest, massive LLMs are highly dependent on their training data and do not reason independently at all.23 To improve, LLMs will need more training data of sufficient quality, and that data will be produced by human experts, broadly defined. LLMs rapidly degrade in quality (so-called model collapse) when trained on the output of other LLMs because they amplify subtle patterns within machine-generated data.24
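The intuition behind model collapse can be illustrated with a deliberately simple toy, sketched here in Python. It is not an LLM and not the experimental setup of the study cited above: it assumes a "model" that is nothing more than a Gaussian fitted to a small sample, with each generation trained only on data drawn from the previous generation's fit. The function name, sample size, and number of generations are all illustrative assumptions.

```python
import random
import statistics


def simulate_recursive_training(generations=200, sample_size=10, seed=0):
    """Toy illustration: refit a Gaussian to samples drawn from the previous fit.

    Returns the fitted standard deviation ("spread") at each generation.
    All parameters are illustrative assumptions, not values from any study.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for human-produced data
    spreads = [sigma]
    for _ in range(generations):
        # Each generation sees only data produced by the previous "model".
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        spreads.append(sigma)
    return spreads


spreads = simulate_recursive_training()
print(f"spread of the original data: {spreads[0]:.3f}")
print(f"spread after {len(spreads) - 1} generations: {spreads[-1]:.3e}")
```

In this toy, small estimation errors compound from one generation to the next, and the fitted spread tends to shrink toward zero, so the variety present in the original data is progressively lost. Real LLM training is far more complex, but the sketch suggests why a steady supply of fresh, human-produced data matters.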

Thus, the deskilling of humans can be seen as posing a threat not just to society and the benefits of AI, but to AI itself. The entire AI sector relies on humans continuing to produce content and interactions worth training a model on, at the same scale as hitherto if not greater, despite the potential impacts of AI on skilled work. All in all, there is little to suggest that human expertise will not continue to be needed, especially if AI is to thrive rather than survive.

The Future AI-Human Ecosystem

AI has enormous potential for good and for ill. Looking to 2030, if history is to bend toward the former, it will require careful, conscious management of the skills ecosystem within organizations and industries.

The notion of the decomposition of roles into tasks is useful, as this supports nuanced analysis of what can be automated, what can be augmented, and where human expertise is required. Furthermore, the wider sustainability of that human expertise must be carefully considered, and steps taken to actively maintain it, if it can be established that this expertise is critical to the continued improvement and realization of AI’s potential.

We suggest leaders ask themselves the following:

- What tasks can be augmented by AI, and what tasks can be automated?

- Are workers currently gaining skills and experience from, or in parallel to, these tasks that may still be needed, meaning measures will be required to retain these skills by alternative means?
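One way to make these two questions concrete is a simple task inventory. The Python sketch below is purely illustrative: the `Mode` categories, the `Task` record, the `skills_at_risk` helper, and the example tasks and skills are all hypothetical, loosely based on the junior professional described earlier. For simplicity, it flags only skills built exclusively through tasks slated for full automation; an organization might equally choose to review augmented tasks.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    AUTOMATE = "automate"  # AI performs the task end to end
    AUGMENT = "augment"    # a human performs the task with AI support
    HUMAN = "human"        # the task remains with a human


@dataclass
class Task:
    name: str
    mode: Mode
    skills_built: set[str] = field(default_factory=set)


def skills_at_risk(tasks, skills_still_needed):
    """Return needed skills that are built only through tasks marked for automation."""
    built_by_automated = set()
    built_elsewhere = set()
    for task in tasks:
        if task.mode is Mode.AUTOMATE:
            built_by_automated.update(task.skills_built)
        else:
            built_elsewhere.update(task.skills_built)
    return (built_by_automated - built_elsewhere) & set(skills_still_needed)


# Hypothetical inventory for a junior role in a professional services firm.
tasks = [
    Task("first-draft documents", Mode.AUTOMATE, {"drafting judgment", "client context"}),
    Task("simple coding", Mode.AUTOMATE, {"code reading"}),
    Task("architecture review", Mode.AUGMENT, {"code reading", "software architecture"}),
    Task("client workshops", Mode.HUMAN, {"client relationship management"}),
]
needed = {"drafting judgment", "code reading", "software architecture"}

print(sorted(skills_at_risk(tasks, needed)))  # ['drafting judgment']
```

Here "code reading" is not flagged because it is still exercised in an augmented task, whereas "drafting judgment" would need to be retained by alternative means.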

Interestingly, AI could offer solutions to the problem it has created. Explainable AI is a major topic of research: if AI can break out of the black box and be open about how it produces its insights, this will make for more effective AI-human collaboration and make the experience more educational for the humans involved.25

Furthermore, since AI is trained on data, and as this data is often the product of human interactions and processes, it is effectively a repository of experience. Driven by this experience, it could be directed not just to solve problems but to coach and mentor.

Neither uncritically adopting technology offering short-term gains nor ignoring the very real opportunities is a sensible approach. There are paths to a productive and sustainable future with a beneficial fusion of AI and human skills, but we are responsible for carving them out.

Acknowledgment

The authors would like to thank Oliver Turnbull and Eric Piancastelli, of Arthur D. Little Catalyst, for their comments on an early iteration of this article.

References

1 US Bureau of Labor Statistics. “Business Sector: Labor Productivity (Output per Hour) for All Workers.” Federal Reserve Bank of St. Louis, 11 December 2024.

2 Monahan, Kelly, and Gabby Burlacu. “From Burnout to Balance: AI-Enhanced Work Models.” Upwork, 23 July 2024.

3 Autor, David. “Applying AI to Rebuild Middle Class Jobs.” Working Paper 32140, National Bureau of Economic Research (NBER), February 2024.

4 Ntsweng, Oteng, Wallace Chipidza, and Keith Carter. “Infusing Humanistic Values into Business as GenAI Shapes Data Landscape.” Amplify, Vol. 37, No. 5, 2024.

5 Schmarzo, Bill. “Unleashing Business Value & Economic Innovation Through AI.” Amplify, Vol. 37, No. 6, 2024.

6 Susskind, Richard, and Daniel Susskind. The Future of the Professions: How Technology Will Transform the Work of Human Experts. Oxford University Press, 2015.

7 Felten, Edward, Manav Raj, and Robert Seamans. “Occupational, Industry, and Geographic Exposure to Artificial Intelligence: A Novel Dataset and Its Potential Uses.” Strategic Management Journal, Vol. 42, No. 12, April 2021.

8 Cui, Zheyuan (Kevin), et al. “The Effects of Generative AI on High Skilled Work: Evidence from Three Field Experiments with Software Developers.” SSRN, 5 September 2024.

9 Migliorini, Sara, and João Ilhão Moreira. “The Case for Nurturing AI Literacy in Law Schools.” Asian Journal of Legal Education, 13 August 2024.

10 Jayatunga, Madura KP, et al. “How Successful Are AI-Discovered Drugs in Clinical Trials? A First Analysis and Emerging Lessons.” Drug Discovery Today, Vol. 29, No. 6, June 2024.

11 Johnson, Nicholas, et al. “Taking Control of AI: Customizing Your Own Knowledge Bots.” Prism, September 2023.

12 “Anticipation, Experimentation and AI: Design Trend Report.” Figma, accessed December 2024.

13 Sreenivasan, Aswathy, and M. Suresh. “Design Thinking and Artificial Intelligence: A Systematic Literature Review Exploring Synergies.” International Journal of Innovation Studies, Vol. 8, No. 3, September 2024.

14 van der Schaaf, Ben, et al. “Managing Clinical Trials During COVID-19 and Beyond.” Arthur D. Little, April 2020.

15 Mokyr, Joel. The Gifts of Athena: Historical Origins of the Knowledge Economy. Princeton University Press, 2004.

16 Harari, Yuval Noah. Nexus: A Brief History of Information Networks from the Stone Age to AI. Random House, 2024.

17 “Jobs of Tomorrow: Large Language Models and Jobs.” World Economic Forum/Accenture, September 2023.

18 Giles, Ian. “Pirate AI.” Eurozine, 12 August 2024.

19 Anderson, Caroline. “What Do Writers Think About AI?” Authors’ Licensing and Collecting Society, 26 June 2024.

20 Susskind and Susskind (see 6).

21 Cabitza, Federico, et al. “Rams, Hounds and White Boxes: Investigating Human-AI Collaboration Protocols in Medical Diagnosis.” Artificial Intelligence in Medicine, Vol. 138, April 2023.

22 Bucaioni, Alessio, et al. “Programming with ChatGPT: How Far Can We Go?” Machine Learning with Applications, Vol. 15, March 2024.

23 de Gregorio, Ignacio. “Apple Speaks the Truth About AI. It’s Not Good.” Medium, 23 October 2024.

24 Shumailov, Ilia, et al. “AI Models Collapse When Trained on Recursively Generated Data.” Nature, Vol. 631, July 2024.

25 Dwivedi, Rudresh, et al. “Explainable AI (XAI): Core Ideas, Techniques, and Solutions.” ACM Computing Surveys, Vol. 55, No. 9, January 2023.